Beyond the hype: can Artificial Intelligence be ethical?

To save or not to save the ladybug? That is the question.

In the same room there are a cute red ladybug and a high-tech Roomba, the robot vacuum cleaner that is revolutionizing the way people do housework. On the one hand, a living being that, however small, is still a living being (and one that plays a very important role in the ecosystem!). On the other, an autonomous machine with the clear mission of cleaning the entire house before its “human occupants” come back home. Roomba is equipped with numerous onboard sensors that detect both hazards (stairs, for example) and foreign bodies on the floor (dust and various objects), and it relies on navigation logic to decide how to move around the room.

And here comes the dilemma: if Roomba finds a ladybug in its path, what should it do? Should it continue on its way and carry on cleaning, regardless of the poor ladybug, or go around the obstacle and let the little insect escape certain death? If it were a spider, maybe we wouldn’t mind the first option, but the question here is about a beautiful ladybug, which, by the way, brings luck! Faced with this situation, Roomba would have to make a decision based on moral and ethical principles. So, what should it do?

This example, maybe a little trivial and simplistic, introduces us to the ethical questions (far more important and far from simple!) that are raised by the technology we are experiencing today and that derive from three revolutionary megatrends. One of them is Artificial Intelligence, meant here as the technology that allows us to interpret both structured and unstructured data automatically and on a large scale, and that plays an increasingly important role in today’s society and economy.

Humans vs. Algorithms

Not long ago we heard about the landmark study by the American company LawGeex, which pitted an AI-based algorithm against a group of twenty lawyers with decades of experience in corporate law and contract review. The goal of the challenge was to spot issues in five NDAs (the contractual basis for most business deals) in the shortest time possible while maintaining a sufficient level of accuracy. The result? The lawyers took an average of 92 minutes to complete the task, with an accuracy rate of 85%. The LawGeex AI won by a landslide: in just 26 seconds, it identified the issues with an accuracy rate of 94%.

What goes around comes around

There are, however, some precedents. It has already happened in chess and poker: Artificial Intelligence has triumphed several times when challenged by human intelligence. But chess and poker are both games with rules that are easy to formalize and “teach” to a machine. By contrast, the result reported above, unimaginable until two years ago, is truly surprising because the interpretation of the law is not so simple to systematize! So the real news is not that top corporate lawyers were pitted against an algorithm, but rather the implicit news behind it: a huge step forward in automating the analysis and interpretation of unstructured data (which, I remind you, differs from structured data in that it is stored without any predefined schema and is therefore not easy to process).

An important question remains unanswered, though. When AI takes on such typically human tasks, a moral question naturally arises:

Can a machine make ethical decisions? If so, based on what? Who defines what is ethical and what is not for the machine?

The truth is that AI systems are not – and cannot be – neutral: a decision made by an AI system reflects the way in which that system was programmed or trained by the person who created it. In other words, it reflects the values, cultural aspects, and opinions of whoever designs, implements and configures it.

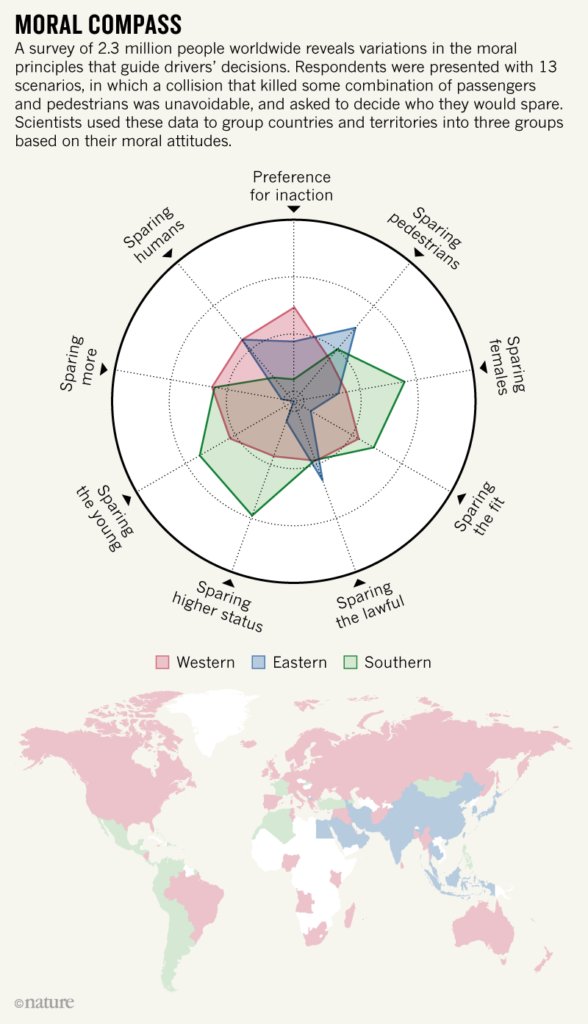

A significant contribution to this debate comes from the famous Moral Machine experiment, in which a group of researchers asked millions of people from all over the world an ethical question: what should a self-driving vehicle with passengers on board do in a situation where every possible decision leads to loss of life? Run over the old woman and the runner, or the mother with the little child? The businessman and an elderly man, or a thief, a dog and a young couple? The experiment showed that the decisions individuals make reflect the value system of their culture.

For example, in many Eastern cultures the elderly play a much more important role in the social structure than in the West, and therefore this group was “saved” more often in the experiments. Similarly, in Central America, South America and former French colonies, there was a tendency to save women more often. The results of this study therefore prompt the following question: should we encode different ethical rules in our algorithms for different geographic markets, or design and apply a universal standard that is a compromise between all the differences found across cultures?

If the people who designed Roomba had taught the robot to recognize insects and trained it to go around them whenever it found them on the floor, the story of the poor ladybug could have had a happy ending.
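To make the point concrete, here is a minimal sketch of how a designer's values could end up inside the robot's decision logic. Everything in it is hypothetical: the `Action` enum, the `PROTECTED_CREATURES` set and the `decide` function are names invented for this illustration and have nothing to do with how a real Roomba is programmed.

```python
from enum import Enum


class Action(Enum):
    KEEP_CLEANING = "keep cleaning"
    GO_AROUND = "go around"


# Hypothetical value choice: the designer, not the robot, decides
# which creatures deserve to be spared.
PROTECTED_CREATURES = frozenset({"ladybug"})


def decide(detected_object: str,
           protected: frozenset = PROTECTED_CREATURES) -> Action:
    """Return what the robot does when it detects something in its path."""
    if detected_object in protected:
        return Action.GO_AROUND
    return Action.KEEP_CLEANING


if __name__ == "__main__":
    for thing in ("dust", "spider", "ladybug"):
        print(f"{thing}: {decide(thing).value}")
```

With this configuration the ladybug is spared and the spider is not. Passing a different `protected` set changes the outcome, which is exactly the point: the "ethics" of the machine is whatever its designer wrote into that set.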

The wrong recipe

There is, however, another aspect that prevents AI systems from being impartial: data bias. As mentioned above, AI systems are programmed and trained by humans, and this “learning” phase often consists of exposing the system to a set of data. Let’s imagine, for example, that we want to teach an AI system to recognize cats. We show it a series of photos of cats, explaining “This is a cat”, and a series of pictures of other subjects, clarifying “This is NOT a cat”. This practice works pretty well in some cases, but not in all, because if the data used to train an AI is “biased”, the AI will be “biased” too.
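The cat example can be sketched in a few lines of Python. This is a toy illustration under invented assumptions, not a real image classifier: each "photo" is reduced to two made-up boolean features (`whiskers`, `indoors`), and the "AI" is a one-rule learner that simply keeps the feature agreeing most often with the labels. The training set is deliberately biased: every cat happens to be photographed indoors.

```python
from typing import Dict, List, Tuple

# A "photo" is a dict of hypothetical features plus a label (is it a cat?).
Example = Tuple[Dict[str, bool], bool]


def train_stump(examples: List[Example]) -> str:
    """Pick the single feature that matches the label most often."""
    feature_names = list(examples[0][0].keys())

    def accuracy(name: str) -> float:
        hits = sum(feats[name] == label for feats, label in examples)
        return hits / len(examples)

    return max(feature_names, key=accuracy)


def predict(feature: str, feats: Dict[str, bool]) -> bool:
    """Say "cat" exactly when the chosen feature is present."""
    return feats[feature]


# Biased data: all cats are indoors, all non-cats are outdoors.
biased_training_set: List[Example] = [
    ({"whiskers": True, "indoors": True}, True),
    ({"whiskers": True, "indoors": True}, True),
    ({"whiskers": False, "indoors": True}, True),    # whiskers not visible
    ({"whiskers": False, "indoors": False}, False),
    ({"whiskers": True, "indoors": False}, False),   # another whiskered animal
    ({"whiskers": False, "indoors": False}, False),
]

learned_feature = train_stump(biased_training_set)
outdoor_cat = {"whiskers": True, "indoors": False}

if __name__ == "__main__":
    print("Learned rule: a cat is anything with", learned_feature)
    print("Outdoor cat recognized as a cat?", predict(learned_feature, outdoor_cat))
```

The learner latches onto `indoors` because, in this data, it separates cats from non-cats perfectly. The rule is faithful to the training set and wrong about the world: a cat photographed in the garden is no longer recognized as a cat.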

Let’s give an example. In the United States, the judiciary uses an algorithm, known as a recidivism index, to evaluate the probability that a convicted person will commit other crimes in the future. This index is used, for instance, to support judges in deciding whether to release a defendant on bail. The algorithm is based on a series of parameters referring to both the individual and the crime committed, and it uses the historical data of the American federal court system to estimate the probability of recidivism. The problem is that the historical data shows a higher probability of recidivism for African American individuals (for a series of historical reasons that we will not discuss here). As a result, it is more difficult, today, for African American individuals to be released on bail. Is that fair and acceptable?

In the end, this happens simply because an algorithm is a set of instructions describing how to perform a certain task. We should therefore blame ourselves, since the algorithm only executes commands based on the rules and data we provide. The recipe for an apple pie is no different, except that it is written in words instead of code. And, to complete the analogy, it is as if we gave a friend the recipe with the wrong quantities, dooming their baking attempt to failure.

So, what now?

In the end, the question is: how can AI systems be designed “ethically”? Of course, we need to consider the enormous complexity that comes from the fact that morality and ethics depend on the social and cultural contexts in which decisions are made. Diversity therefore plays a critical role in this field.

Today the commitment to ethical design is growing. One example is the European WeNet project, which aims to design and implement an AI-based platform that enables diversity-aware interactions between people from different cultures, while avoiding any bias or statistical discrimination.

U-Hopper is collaborating with the project’s consortium to achieve this goal. The reason we decided to participate is simple: while building an increasingly technological future, we need to keep in mind that the way we design algorithms puts us in a position of great power, but also of great responsibility, to an extent never experienced before.

Email us at info@u-hopper.com to get in touch and receive more details about the project!